On Y2King Myself, or "What Would Marie Kondo Do?"

- 2019/24/01

- 8 min read

This is a fun one and mostly a self-heckle. I’ll set the scene.

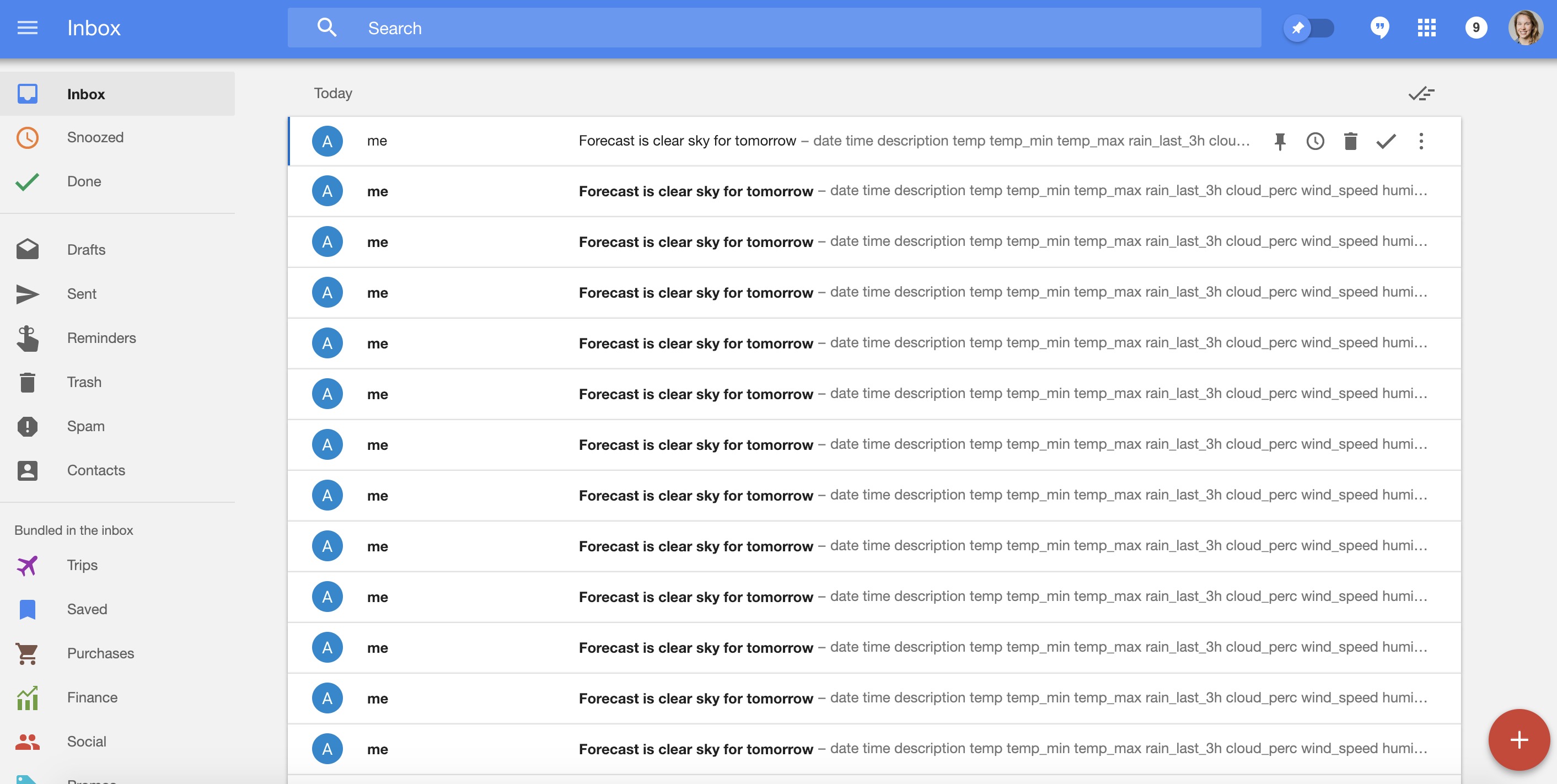

It’s Jan 1, 2019, the day after New Year’s Eve. I realize I’ve made it home with my phone. Nice. 2019 is off to a good start. I check it and notice a big, big notifications number on the Inbox app. Like 12,000+ kinda big.

Normally, I keep a pretty clean inbox. At any given time I’ll have max 3 or 4 unread emails because I aggressively archive new emails that don’t need attention and religiously unsubscribe from marketing emails.[1]

But over 12k emails…what did I do last night??

I hold my phone out at arm’s length and tap gingerly on the Inbox app icon, half believing that the weight of all those emails will short-circuit or just melt the thing down in front of my eyes. Who knows? My phone’s not used to that kind of Inbox stress! I’m sure it’s already got enough anxiety about being out of warranty now that it’s 2019 (that’s gotta mean middle-aged in phone years, plus all these news alerts do age one) and also always avoiding water and trying not to be dropped all the time. Must be stressful. Anyway, I digress.

My phone didn’t melt, bless her, but the scene I was met with was one of utter carnage. Thousands of emails from…myself.

It was what I believe the experts call a hot mess. But I had a pretty good hunch about what had happened. I’ll back up to this summer [tape rewinding sound effect].

New York weather isn’t known for being unpredictable, necessarily, so maybe it was a weird year or maybe I’m just bad at checking the weather. In any case, I’d been getting rained on a lot. This was doubly unwelcome as I was usually out with my laptop in my backpack and had to do the awkward shuffle-run back to shelter when these unexpected downpours happened.

After the third damp shuffle-run into the nearest bodega I had an idea. Why not email myself early in the morning when there’s a chance it’s going to rain that day? Then at least I’d give myself fair warning and pack an umbrella. (I’m well aware that there are better solutions to this predicament, such as acquiring Karen Smith’s fifth sense or using an actual app that already does this. But that would be too easy.)

So this is entirely your own fault, huh? Do go on

Setting up a script to email myself the weather every morning involved three main parts: spinning up an RStudio server instance, grabbing the necessary weather data from somewhere, and emailing it to myself on a schedule.

As you may have guessed by now, the schedule was the part of this that went haywire (or rather, did exactly what it was supposed to) when the new year hit.

Fast forward to New Year’s Day 2019 [pause for VHS fast-forwarding noises]. When I blearily logged into the RStudio server instance and listed out what the native Linux scheduler had on record (crontab -l), I found that there were two (2) lines in the crontab file. One would send me an email every morning before I woke up with the day’s weather. That’s the good one. I don’t totally remember leaving the offending line in, but I can see how it would have happened.

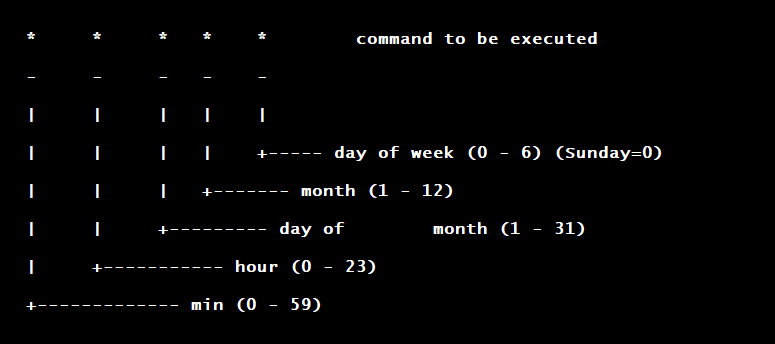

If you’re not familiar, cron uses a five field syntax to specify the schedule on which a particular script should be run. There’s a spot for minute, hour, day of month, month, and day of week.

When I was testing the emailing portion of the script, I used a cron to send myself an email every minute, to check that things were working correctly. Once I was satisfied, I must have added a new cron (the good one) and, rather than deleting the old cron, changed it to run in a different month than the current one (so far in the future it could barely even be contemplated!) while I tested that the new cron was working correctly.

Turns out, the month I changed it to was January. And oh boy did January start off with a bang. The server ran the whole script (which involves hitting an API each time) and shot off an email to me with the results, every minute on the minute for…quite a few hours.
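To make the mix-up concrete, here is a minimal base R sketch of how a five-field schedule decides whether to fire. The `cron_matches()` helper and both example schedules are hypothetical illustrations, not the actual crontab lines (the 6:00 send time in particular is made up). The matcher only understands `*` and single integers, so it ignores ranges, lists, steps, and cron's special handling when both day of month and day of week are restricted.

```r
# Hypothetical helper: does a five-field cron schedule fire at `time`?
# Fields, in order: minute, hour, day of month, month, day of week.
# Only "*" and single integers are supported in this sketch.
cron_matches <- function(expr, time) {
  fields <- strsplit(trimws(expr), "\\s+")[[1]]
  stopifnot(length(fields) == 5)

  lt <- as.POSIXlt(time)
  # POSIXlt months run 0-11, so shift to cron's 1-12; wday is 0-6 with Sunday = 0
  actual <- c(lt$min, lt$hour, lt$mday, lt$mon + 1, lt$wday)

  is_star <- fields == "*"
  wanted <- suppressWarnings(as.integer(fields))  # NA wherever the field is "*"
  all(is_star | (!is_star & wanted == actual))
}

every_minute_in_january <- "* * * 1 *"  # assumed shape of the forgotten test cron
daily_morning <- "0 6 * * *"            # assumed; the real send time isn't stated

july_morning <- as.POSIXct("2018-07-15 06:00:00", tz = "UTC")
new_years_day <- as.POSIXct("2019-01-01 00:01:00", tz = "UTC")

cron_matches(every_minute_in_january, july_morning)   # FALSE: dormant all summer
cron_matches(every_minute_in_january, new_years_day)  # TRUE: and every minute after
cron_matches(daily_morning, july_morning)             # TRUE: the good one
```

A schedule like the first one looks harmless for months because the month field never matches, and then matches every single minute once January arrives.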

Luckily, I valiantly slayed the bad old cron with some heroic backspaces and lived to tell the tale. If you’re interested in the other two parts of the project – setting up the server and grabbing the data – I’ll give a bit of color to both.

owmr, gmailr, and RStudio server

Okay so back to alternately sunny and rainy summertime in Brooklyn when I’m starting this weekend project of emailing myself the weather (all code here).

First order of business is to find a package that will get me a weather forecast for my neighborhood. I usually search for existing R packages that interface with APIs before I think about integrating with an API myself. Turns out a lot of weather APIs like Wunderground no longer provide free API keys 😔, making their associated R packages less attractive. Luckily there was one that fit most of my needs called owmr which plugs into OpenWeatherMap’s API. You create an API key (which you can now store as an environment variable, yay) and you’re good to go.

The main function I used, owmr::get_forecast(), lets you specify a zip code or city name and get temps, wind speeds, humidity, cloud coverage, aaaaaand rain, among other things, for every three hour period of a given day.

A good amount of the munging code I added to the pipeline after grabbing the owmr data is now incorporated into the package itself, via a couple of pull requests I thought it might benefit from.

Beyond that, most of what I had to do was degree conversion,

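    library(dplyr)  # %>%, mutate_at(), vars(), contains()

    # Convert a temperature from kelvin to degrees Fahrenheit
    kelvin_to_fahrenheit <- function(x) {
      (x - 273.15) * 1.8 + 32
    }

    # Apply the conversion to every column whose name contains "temp"
    un_kelvin <- function(tbl) {
      tbl %>%
        mutate_at(vars(contains("temp")), kelvin_to_fahrenheit)
    }

light cleaning,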

    # nix_mains() and pluck_weather() are helpers not shown in this post
    clean_raw <- function(tbl) {
      tbl %>%
        nix_mains() %>%
        mutate(
          date_time = dt_txt %>% lubridate::as_datetime(),
          description = weather %>%
            pluck_weather("description")
        ) %>%
        rename(
          rain_last_3h = rain_3h,
          cloud_perc = clouds_all
        ) %>%
        select(
          dt, date_time, description,
          starts_with("temp"), -temp_kf, # an internal param
          rain_last_3h, cloud_perc,
          wind_speed, humidity
        ) %>%
        un_kelvin()
    }

and quick summarizing.

    # style_numeric() and replace_na_df() are helpers not shown in this post
    summarise_weather <- function(tbl) {
      tbl %>%
        style_numeric() %>%
        replace_na_df() %>%
        mutate(
          date_time = as.character(date_time)
        ) %>%
        separate(date_time, into = c("date", "time"), " ") %>% # tidyr
        mutate(time = str_remove_all(time, ":[0-9:]+")) %>% # stringr; keep just the hour
        select(-dt) %>%
        filter(date == lubridate::today() + 1) # Grab just tomorrow
    }

The next step was emailing this summary to myself. Jim Hester’s gmailr is, unsurprisingly, very user-friendly. I recommend Jenny Bryan’s gmailr tutorial for a walkthrough of all the credentialing with Google that needs to happen before you can get going with the package.

This is the gist of what the emailing portion of the script looks like. The subject line contains the info I normally glance at every morning before archiving the email because if I didn’t mention before I usually keep a cleanish inbox except under certain unforeseen or dire circumstances no I’m not talking about anything in particular why do you ask?

    # Build the email: glue() fills in the subject, xtable() renders the HTML table.
    # Newer gmailr releases prefix these functions with gm_ (gm_mime(), gm_send_message(), ...).
    msg <- mime() %>%
      to(my_email) %>%
      from(my_email) %>%
      subject(glue("Forecast is {clean$description[2]} for tomorrow")) %>%
      html_body(print(xtable(gist), type = "html"))

    send_message(msg)

The final step of setting up a server, setting up git and SSH keys, and installing all the Linux dependencies (libcurl, openssl, etc.) to be able to install R packages (nothing depending on rJava this time 🙏) is, for me, usually finicky and a bit of a pain. There are so many good tutorials out there, though, and the convenience of having your IDE (with terminal tab!) in the cloud is worth it, imo. Plus once you’ve got something working it’s set it and forget it, you know, what could go wrong?

Marie Kondo is right as usual

In the end my personal Y2K[2] scenario could have been much worse. I escaped relatively unscathed, with an AWS bill probably $20 higher than it is most months. But what better way to kick off 2019 than defusing a literal ticking time bomb left by your past self?

There’s some broader lesson in here somewhere but mostly what I’m taking away is that one should Marie Kondo one’s repo every once in a while. If something isn’t being used at the current moment and/or doesn’t spark joy, it should probably be rm’d. Whether it be old bits of code that aren’t used anymore or old crons or random analysis detritus, we should rely on git to be able to dredge these dead weights up from the depths if we decide maybe we’ll give that old sweater one more shot. Or else unexpected things can happen.
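In that tidying spirit, here is a rough base R sketch of a crontab audit that flags entries set to run every minute of every hour, whatever their day and month fields say. The `flag_every_minute()` helper and the example paths are hypothetical, and the check is deliberately naive: it only looks for a literal `*` in the first two fields.

```r
# Hypothetical audit helper: flag crontab entries that run every minute of every hour.
# `lines` is a character vector, e.g. the output of `crontab -l`.
flag_every_minute <- function(lines) {
  lines <- trimws(lines)
  # Drop blank lines and comments
  entries <- lines[nzchar(lines) & !startsWith(lines, "#")]
  fields <- strsplit(entries, "\\s+")
  # A schedule entry has five time fields plus a command, so at least six pieces
  is_noisy <- vapply(
    fields,
    function(f) length(f) >= 6 && f[1] == "*" && f[2] == "*",
    logical(1)
  )
  entries[is_noisy]
}

# Made-up crontab contents for illustration
crontab_lines <- c(
  "# weather email",
  "0 6 * * * Rscript /path/to/weather.R",
  "* * * 1 * Rscript /path/to/weather.R"
)

flag_every_minute(crontab_lines)
```

On a real server the input could come from `system2("crontab", "-l", stdout = TRUE)`. Anything the function returns is a candidate for the rm pile.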

[1] The notable exception here is the Chubbies marketing emails which I usually get a kick out of. (They contain subject lines like (╯°□°)╯︵ ┻━┻ and random thought bubbles like “When will Sour Patch Kids finally grow up and decide to become Sour Patch adults? I’m tired of all these irresponsible candies and ready for one that can work a damn 9-to-5.”)

[2] P.S. totally unrelated, but there’s a great podcast about the actual Y2K that I’d totally recommend.